In a move that’s set to shake up the AI industry, Anthropic has announced the release of its new Prompt Caching technology. This approach promises to cut the latency and cost of working with large language models, particularly for long, repeated prompts, potentially changing how we interact with AI systems.

What is Prompt Caching?

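At its core, prompt caching is like letting the AI keep its notes on material it has already read. Instead of reprocessing the same long prompt prefix (a system prompt, a reference document, or a set of examples) on every request, the model reuses the already-processed prefix and only works through the new part of the request. The answer itself is still generated fresh each time; what is cached is the prompt, not the response. It’s akin to how we humans might keep a well-studied manual open on the desk rather than rereading it before every question.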
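The idea can be illustrated with a toy model. This is a minimal sketch, not Anthropic's implementation: it assumes the cache remembers which shared prompt prefixes have already been processed, and it counts whitespace-separated words as stand-in "tokens". The class name `ToyPrefixCache` and its method are hypothetical, introduced only for illustration.

```python
import hashlib


class ToyPrefixCache:
    """Toy model of prompt caching (hypothetical, for illustration only).

    It tracks which prompt prefixes were already processed and reports
    how many "tokens" would need fresh processing for each request.
    """

    def __init__(self):
        # Maps a hash of the prefix text to its token count.
        self._processed = {}

    def tokens_to_process(self, prefix: str, question: str) -> int:
        """Return the number of tokens that need fresh processing."""
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        question_tokens = len(question.split())
        if key in self._processed:
            # Cache hit: the prefix was processed before, so only the
            # new part of the request needs work.
            return question_tokens
        # Cache miss: process the whole prompt and remember the prefix.
        prefix_tokens = len(prefix.split())
        self._processed[key] = prefix_tokens
        return prefix_tokens + question_tokens


cache = ToyPrefixCache()
manual = "word " * 1000  # stand-in for a long shared document

first = cache.tokens_to_process(manual, "What is the refund policy?")
second = cache.tokens_to_process(manual, "How do I reset my password?")
print(first, second)
```

In this sketch the first request pays for the full document plus the question, while the second request, which shares the same prefix, pays only for its own question. The model would still generate each answer from scratch.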

The Potential Game-Changing Benefits

Dr. Sarah Johnson, an AI researcher at Stanford University, explains: “Prompt caching could be a real game-changer. We’re talking about AI systems that can respond in the blink of an eye to common queries. This isn’t just about speed – it’s about making AI more accessible and useful in our daily lives.”

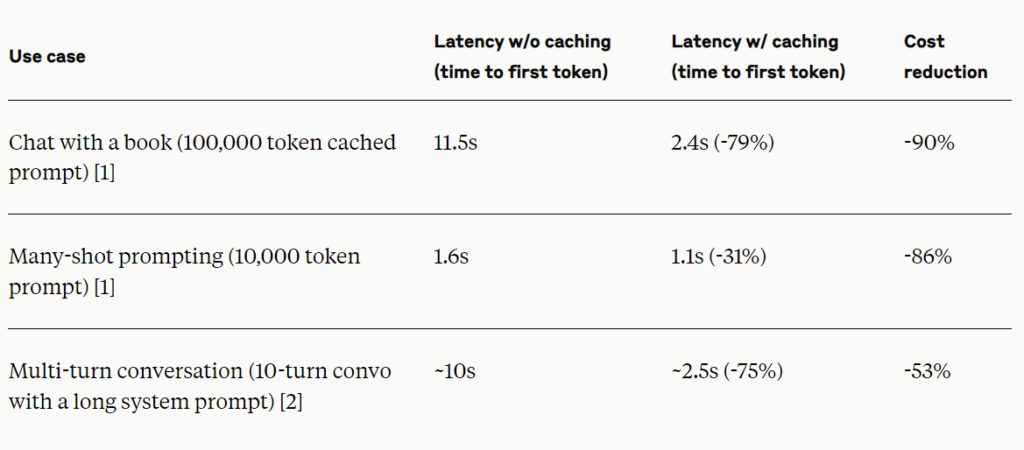

The benefits of Prompt Caching technology extend beyond just faster responses:

- Energy Efficiency: By reducing the need for constant heavy computations, prompt caching could significantly cut down on energy consumption. In an age where the environmental impact of AI is under scrutiny, this is a welcome development.

- Cost Reduction: Faster processing times and lower energy use translate directly into cost savings. This could make advanced AI systems more affordable and accessible to a broader range of organizations and researchers.

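- Consistency is Key: For businesses relying on AI for customer service, caching a single shared set of instructions and reference material means every request starts from the same context, which could help keep responses to common queries consistent and potentially improve customer satisfaction.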

- Scaling New Heights: With cached prompt prefixes absorbing much of the repeated processing work, AI systems can potentially manage a much larger volume of queries, opening doors to more widespread AI adoption.
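To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The function name, the price multipliers for writing to and reading from the cache, and the token counts are all illustrative assumptions, not figures from this article; actual pricing should be checked against the provider's current documentation. The sketch also assumes the cached prefix stays available for every request after the first.

```python
def input_cost(prefix_tokens, new_tokens, requests, base_price_per_token,
               write_multiplier=1.25, read_multiplier=0.10, cached=True):
    """Estimate total input cost for `requests` calls sharing one prefix.

    The multipliers are assumed values for illustration: writing the
    prefix to the cache costs slightly more than normal input, and
    reading it back costs a fraction of normal input.
    """
    if requests == 0:
        return 0.0
    if not cached:
        # Every request pays full price for prefix and new tokens.
        return requests * (prefix_tokens + new_tokens) * base_price_per_token
    # First request writes the prefix to the cache.
    first = prefix_tokens * write_multiplier + new_tokens
    # Later requests read the prefix from the cache (assumed never to expire).
    later = (requests - 1) * (prefix_tokens * read_multiplier + new_tokens)
    return (first + later) * base_price_per_token


# Hypothetical workload: a 10,000-token shared prefix, 100 new tokens
# per request, 100 requests, priced at 1 unit per token for simplicity.
without_cache = input_cost(10_000, 100, 100, 1.0, cached=False)
with_cache = input_cost(10_000, 100, 100, 1.0, cached=True)
savings = 1 - with_cache / without_cache
print(f"{without_cache:.0f} vs {with_cache:.0f} units, {savings:.0%} saved")
```

Under these assumed numbers the cached workload costs roughly an eighth of the uncached one. The saving shrinks when the shared prefix is short relative to the new content, or when the cache expires between requests and the prefix has to be written again.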

Not Without Its Challenges

However, as with any breakthrough technology, prompt caching isn’t without its potential drawbacks.

“We need to approach this with cautious optimism,” warns Dr. Michael Lee, an AI ethics specialist at MIT. “While the benefits are clear, we must also consider the implications carefully.”

Some of the concerns raised by experts include:

- Freshness of Information: In a rapidly changing world, how do we ensure that cached prompt content, such as reference documents and instructions, remains up-to-date and relevant?

- The Personal Touch: There’s a risk that over-reliance on caching could lead to more generic, less personalized interactions.

- Nuance and Context: Language is complex, and subtle differences in phrasing or context could be lost on a system heavily reliant on caching.

- Privacy Matters: The storage of frequently used prompts, even temporarily, raises questions about data privacy and security.

Looking Ahead

Despite these challenges, the mood in the AI community is one of excitement. Anthropic’s prompt caching technology represents a significant step forward in making AI systems more efficient and accessible.

As we move forward, it will be crucial to balance the benefits of this technology with careful consideration of its implications. With responsible development and implementation, prompt caching could usher in a new era of more responsive, efficient, and accessible AI systems.

The release of prompt caching is not just a technological advancement; it’s a glimpse into a future where AI becomes an even more integral part of our daily lives. As this technology evolves, it will undoubtedly spark further innovations and discussions in the ever-expanding field of artificial intelligence.