Introduction:

Liquid AI has recently unveiled its Liquid Foundation Models (LFMs), a new series of language models that the company expects to have a broad impact on the tech industry and its applications. These models are pre-trained on vast amounts of data and can then be fine-tuned on specific tasks. In this article, we will explore the potential impact of these models on the tech industry, their benefits, and how they stand out from other models in the field.

The Power of LFM-Based Models:

Like other foundation models, Liquid AI’s new models first learn from a large amount of data and can then be fine-tuned on specific tasks. This two-stage approach has several potential advantages over training a separate model from scratch for each task, including:

- Improved accuracy: By fine-tuning the models on specific tasks, Liquid AI’s LFM-based models can achieve higher accuracy in their predictions and outputs, leading to better results for users.

- Faster training: Because fine-tuning starts from a model that has already learned from a large amount of data, adapting it to a new task typically takes far less data and time than training from scratch, allowing for faster deployment of AI systems.

- Greater adaptability: The fine-tuning process allows the models to adapt to new tasks and data, making them more versatile and capable of handling a wide range of applications.
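To make the pre-train-then-fine-tune idea concrete, here is a minimal sketch on a toy linear model. It is not Liquid AI's training procedure: the datasets, the `train` helper, the learning rate, and the step counts are all illustrative assumptions. It only shows why starting from pre-trained weights can reach a lower task loss than starting from scratch when both get the same small training budget.

```python
# Toy illustration of pre-training followed by fine-tuning.
# Everything here (data, model, hyperparameters) is a hypothetical example,
# not Liquid AI's actual method.

def mse(w, b, data):
    """Mean squared error of the linear model y = w * x + b on data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, steps, lr=0.1):
    """Plain gradient descent on the MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# "General" data: many samples of y = 2x + 1.
general_data = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-10, 11)]
# "Task" data: a few samples of a related but different target, y = 2.2x + 0.8.
task_data = [(x, 2.2 * x + 0.8) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

# Stage 1: pre-train on the general data with a large step budget.
pre_w, pre_b = train(0.0, 0.0, general_data, steps=500)

# Stage 2: fine-tune on the task data with a small step budget.
ft_w, ft_b = train(pre_w, pre_b, task_data, steps=10)

# Baseline: the same small budget, but starting from scratch.
scratch_w, scratch_b = train(0.0, 0.0, task_data, steps=10)

finetuned_loss = mse(ft_w, ft_b, task_data)
scratch_loss = mse(scratch_w, scratch_b, task_data)
print(f"fine-tuned task loss:   {finetuned_loss:.4f}")
print(f"from-scratch task loss: {scratch_loss:.4f}")
```

With the same ten task-specific steps, the fine-tuned model ends up with the lower task loss because its starting point is already close to the target. Real language models differ in scale and architecture, but the warm-start intuition is the same.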

Applications and Benefits:

The introduction of Liquid AI’s LFM-based models is expected to have a significant impact on the tech industry, with potential applications in various sectors, including:

- Natural Language Processing (NLP): The improved accuracy and adaptability of these models can lead to better NLP systems, enabling more effective communication between humans and AI systems.

- Machine Translation: Fine-tuning these models on translation data can enhance the performance of machine translation systems, making them more accurate and efficient in translating between languages.

- Text Generation: The models can be used to generate high-quality, contextually relevant text for various applications, such as content creation, chatbots, and more.

- Sentiment Analysis: The improved accuracy of these models can lead to better sentiment analysis systems, helping businesses to understand customer opinions and preferences more effectively.

How LFM-Based Models Stand Out:

Liquid AI’s LFM-based models set themselves apart from other models in the field by offering several key advantages:

- Fine-tuning for specific tasks: The ability to fine-tune the models on specific tasks allows them to achieve higher accuracy and adaptability, making them more effective in various applications.

- Faster training and deployment: Starting from a pre-trained model reduces the data and time needed to adapt to a new task, allowing for faster deployment of AI systems.

- Greater versatility: The fine-tuning process allows the models to adapt to new tasks and data, making them more capable of handling a wide range of applications.

The Liquid Foundation Models:

The Liquid Foundation Models, a suite of pre-trained models developed by Liquid AI, serve as the foundation for the LFM-based models. These models are trained on a diverse range of data sources, enabling them to learn a wide variety of tasks and adapt to new situations. The Liquid Foundation Models provide a strong starting point for fine-tuning, allowing Liquid AI’s LFM-based models to achieve even greater accuracy and adaptability in their respective tasks.

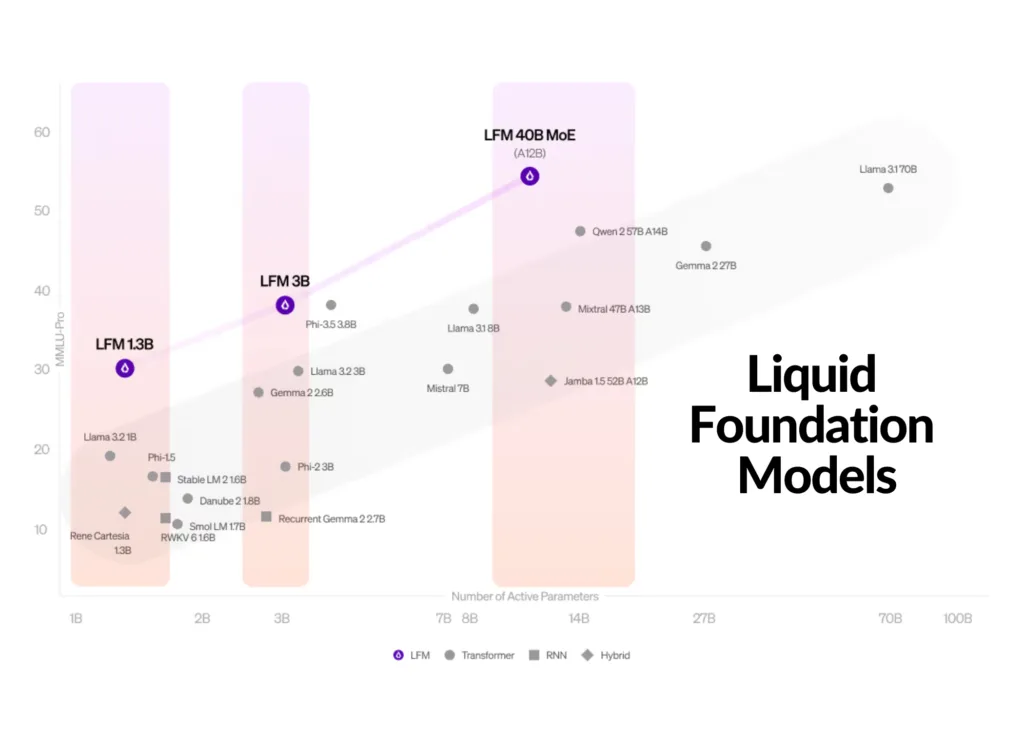

Liquid AI has introduced three new models in its first series of language models: the 1.3B model, the 3.1B model, and the 40.3B Mixture of Experts (MoE) model. These models are designed to cater to different use cases and requirements, offering a range of capabilities and performance levels.

The 1.3B model is a smaller, more lightweight model, suitable for applications with limited computational resources or specific, focused tasks. The 3.1B model offers a balance between performance and resource usage, making it a versatile choice for a wide range of applications. The 40.3B MoE model is a larger, more powerful model, designed for complex tasks and applications that require high performance and accuracy.
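A rough way to see what "limited computational resources" means in practice is to estimate how much memory the weights alone would occupy at a given numeric precision. The sketch below is a back-of-the-envelope calculation, not a published figure from Liquid AI: the `weight_memory_gb` helper and the list of precisions are assumptions for illustration, and the estimate ignores activations, caches, and runtime overhead.

```python
# Back-of-the-envelope estimate of weight storage for each model size.
# Parameter counts come from the model names; the precisions and the helper
# function are illustrative assumptions, not Liquid AI specifications.

MODEL_PARAMS = {
    "1.3B": 1.3e9,
    "3.1B": 3.1e9,
    "40.3B MoE": 40.3e9,
}

BYTES_PER_PARAM = {
    "32-bit": 4,
    "16-bit": 2,
    "8-bit": 1,
}

def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to store the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

for model, params in MODEL_PARAMS.items():
    for precision, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(params, nbytes)
        print(f"{model:>10} @ {precision}: about {gb:.1f} GB of weights")
```

At 16-bit precision this works out to roughly 2.6 GB for the 1.3B model, 6.2 GB for the 3.1B model, and 80.6 GB for the 40.3B model. The estimate counts all parameters of the MoE model, on the assumption that every expert must be stored even if only some are used for a given input.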

Trying Out the New Liquid Foundation Models:

Liquid AI’s LFM-based models are now available on Liquid Playground, Lambda (Chat UI and API), and Perplexity Labs. Soon, they will also be available on Cerebras Inference. The LFM stack is being optimized for NVIDIA, AMD, Qualcomm, Cerebras, and Apple hardware, ensuring compatibility and performance across a wide range of devices and platforms.

Conclusion:

Liquid AI’s new LFM-based models represent a significant leap forward in the field of AI, offering improved accuracy, adaptability, and efficiency. As these models continue to be fine-tuned and applied to various industries and applications, they have the potential to revolutionize the way we interact with AI systems and transform the tech industry as a whole. With their unique approach to language modeling and the support of the Liquid Foundation Models, Liquid AI is setting a new standard for AI development and paving the way for a more advanced and capable future.